Messages in Kafka are always sent to or received from a topic. Because Kafka's performance is effectively constant with respect to data size, storing data for a long time is not a problem.

A topic can have zero, one, or many consumers that subscribe to the data written to it. The replication factor defines the number of copies of each partition that are held within the cluster.

For example, if the retention policy is set to two days, then a record is available for consumption for the two days after it is published. After that, it is discarded to free up space. Users can additionally request that new log segments be created periodically.

Internal topics are created and used internally by the Kafka brokers and clients. They store consumer offsets (__consumer_offsets) and transaction state (__transaction_state).

In ksqlDB, SHOW TOPICS EXTENDED also displays consumer groups and their active consumer counts.

kafka-topics.sh is part of the AMQ Streams distribution and can be found in the bin directory. When you create topics manually, you can specify their configuration at creation time. After creating a topic, verify that it exists using kafka-topics.sh, which can also list all topics. To delete a topic, use the kafka-topics.sh utility, and then verify that the topic was deleted. To delete an existing configuration option, use the kafka-configs.sh tool.
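The list, delete, and verify steps can be scripted. The following is a minimal sketch under a few assumptions. The tool is invoked as bin/kafka-topics.sh from the AMQ Streams installation directory, and a broker listens on localhost:9092. The helper names (list_topics_cmd, delete_topic_cmd, topic_listed) are hypothetical. The helpers only build argument lists, which you could then pass to subprocess.run.

```python
# Hypothetical helpers (not part of AMQ Streams) that assemble
# kafka-topics.sh command lines for listing and deleting topics.
# Assumptions: the script is at bin/kafka-topics.sh and a broker
# listens on localhost:9092. Nothing is executed here.
from typing import List

KAFKA_TOPICS = "bin/kafka-topics.sh"
BOOTSTRAP = "localhost:9092"


def list_topics_cmd(bootstrap: str = BOOTSTRAP) -> List[str]:
    """Command that lists all topics, one name per output line."""
    return [KAFKA_TOPICS, "--bootstrap-server", bootstrap, "--list"]


def delete_topic_cmd(topic: str, bootstrap: str = BOOTSTRAP) -> List[str]:
    """Command that deletes the named topic."""
    return [KAFKA_TOPICS, "--bootstrap-server", bootstrap,
            "--delete", "--topic", topic]


def topic_listed(list_output: str, topic: str) -> bool:
    """True if `topic` appears as a line in the output of the --list command."""
    return topic in {line.strip() for line in list_output.splitlines()}


if __name__ == "__main__":
    print(" ".join(delete_topic_cmd("mytopic")))
    print(" ".join(list_topics_cmd()))
```

For example, you could run the delete command with subprocess.run(..., check=True), capture the stdout of the list command with capture_output=True and text=True, and pass it to topic_listed. Topic deletion may complete asynchronously, so the name might still appear briefly.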

A topic is always split into one or more partitions. The replication factor determines the number of replicas, including the leader and the followers. One replica is the leader, and the other replicas are follower replicas.

Use the --describe option of kafka-configs.sh to get the current configuration of a topic. To add or change options, specify them in the --add-config option.

To keep two topics in sync, you have two options. You can dual write to them from your client, using a transaction to keep the writes atomic. More cleanly, you can use Kafka Streams to copy one topic into the other.

Additionally, Kafka brokers support a compacting policy. For a topic with the compacted policy, the broker always keeps only the last message for each key.
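To illustrate the compacted policy, here is a simplified in-memory model. It keeps only the last message for each key and preserves the original offsets. It is not Kafka's log cleaner. The (offset, key, value) tuple layout and the compact function are assumptions made for this sketch.

```python
# A simplified, in-memory model of the compacted cleanup policy:
# after compaction, only the last message for each key is kept.
# Illustration only; Kafka's log cleaner works on log segments,
# runs periodically, and also handles tombstones (null values).
from typing import Dict, List, Optional, Tuple

Message = Tuple[int, str, Optional[str]]  # (offset, key, value)


def compact(log: List[Message]) -> List[Message]:
    """Return the messages that survive compaction, in offset order."""
    last_offset_for_key: Dict[str, int] = {}
    for offset, key, _value in log:
        last_offset_for_key[key] = offset  # later offsets overwrite earlier ones
    survivors = set(last_offset_for_key.values())
    # Offsets are preserved: compaction removes messages but never renumbers them.
    return [msg for msg in log if msg[0] in survivors]


if __name__ == "__main__":
    log = [
        (0, "user-1", "alice@old.example"),
        (1, "user-2", "bob@example"),
        (2, "user-1", "alice@new.example"),
    ]
    print(compact(log))
```

Offset 0 disappears because offset 2 carries a newer value for the same key. Offset 1 survives because it is the only message for user-2.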

In ksqlDB, SHOW TOPICS lists the available topics in the Kafka cluster that ksqlDB is configured to connect to (default setting for bootstrap.servers: localhost:9092). By default, it does not display hidden topics, such as topics that match any pattern in the ksql.hidden.topics configuration. Outside ksqlDB, the kafka-topics.sh tool can be used to list and describe topics.

In ksqlDB, SHOW ALL TOPICS lists all topics, including hidden topics.

Internal topics such as __consumer_offsets and __transaction_state can be configured using dedicated Kafka broker configuration options. Their names start with the prefix offsets.topic. and transaction.state.log. respectively.

Retention can also be limited by size, for example until the partition has 1GB of messages.

When creating a topic, you can configure the number of replicas using the replication factor. One of the replicas for a given partition is elected as the leader. For example, if you set the replication factor to 3, there will be one leader and two follower replicas. A test topic may have just one partition and one replica. For a production environment, you would have many more broker nodes, partitions, and replicas for scalability and resiliency.
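The leader and follower roles can be pictured with a small model. This is a sketch under simplifying assumptions. The Partition class is hypothetical. The leader is taken to be the first live replica in assignment order, and when it fails, the next live replica takes over. Real Kafka elects the new leader from the in-sync replicas through the controller, which is omitted here.

```python
# A simplified model of a replicated partition: with replication factor N
# there is one leader and N - 1 followers. If the leader fails, another live
# replica takes over. Real Kafka elects the new leader from the in-sync
# replicas via the controller; that detail is omitted here.
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Partition:
    replicas: List[int]                       # broker IDs, in assignment order
    offline: Set[int] = field(default_factory=set)

    @property
    def leader(self) -> int:
        # Pick the first live replica in assignment order.
        for broker in self.replicas:
            if broker not in self.offline:
                return broker
        raise RuntimeError("no live replica available")

    @property
    def followers(self) -> List[int]:
        # Every live replica other than the leader is a follower.
        return [b for b in self.replicas
                if b != self.leader and b not in self.offline]

    def fail(self, broker: int) -> None:
        self.offline.add(broker)


if __name__ == "__main__":
    p = Partition(replicas=[1, 2, 3])   # replication factor 3
    print(p.leader, p.followers)        # 1 [2, 3]
    p.fail(1)
    print(p.leader, p.followers)        # 2 [3]
```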

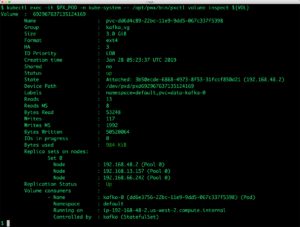

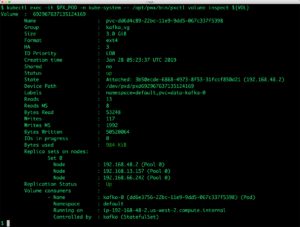

Partitions act as shards.

It is possible to create Kafka topics dynamically, but this relies on the Kafka brokers being configured to allow dynamic topics. To disable automatic topic creation, set auto.create.topics.enable to false in the Kafka broker configuration file. Kafka also offers the possibility to disable deletion of topics.

The message retention policy defines how long messages are stored on the Kafka brokers. It can be defined based on time, partition size, or both. For example, messages can be stored for 7 days or until the partition reaches the 1GB limit.
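A combined time and size limit, such as 7 days or 1GB, can be sketched as follows. This is a simplified model of one partition. Messages are dropped oldest-first while either limit is exceeded, so whichever limit is reached first wins. The parameter names echo the real topic options retention.ms and retention.bytes, but the function itself is hypothetical. It also assumes that 1GB means 1024**3 bytes. Real brokers delete whole log segments rather than single messages.

```python
# Simplified model of time- and size-based retention on one partition.
# Messages are dropped oldest-first while either limit is exceeded, so
# whichever limit is reached first wins. Real brokers delete whole log
# segments, not individual messages.
from dataclasses import dataclass
from typing import List

DAY_MS = 24 * 60 * 60 * 1000


@dataclass
class Record:
    timestamp_ms: int
    size_bytes: int


def apply_retention(records: List[Record], now_ms: int,
                    retention_ms: int, retention_bytes: int) -> List[Record]:
    """Return the records still retained; `records` is ordered oldest first."""
    # Time limit: drop anything older than retention_ms.
    kept = [r for r in records if now_ms - r.timestamp_ms <= retention_ms]
    # Size limit: drop the oldest remaining records until under the cap.
    total = sum(r.size_bytes for r in kept)
    while kept and total > retention_bytes:
        total -= kept.pop(0).size_bytes
    return kept
```

For the 7-day or 1GB example, you could call apply_retention(records, now_ms, 7 * DAY_MS, 1024 ** 3).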

The leader replica is used by producers to send new messages and by consumers to consume messages.

Auto-created topics use the default topic configuration, which can be specified in the broker properties file. The kafka-configs.sh tool can be used to modify topic configurations.

Before managing topics, make sure that the AMQ Streams cluster is installed and running, and specify the host and port of the Kafka broker to connect to. For example, you can use kafka-topics.sh to create a topic named test with a single partition and only one replica, or to create and describe a topic named mytopic.
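Creating the single-partition test topic and describing a topic follow the same pattern as other kafka-topics.sh commands. Below is a minimal sketch with the same assumptions as before: the script is at bin/kafka-topics.sh and the broker is at localhost:9092. The helper names create_topic_cmd and describe_topic_cmd are hypothetical. The flags --create, --topic, --partitions, --replication-factor, --config, and --describe are standard kafka-topics.sh options.

```python
# Hypothetical helpers that assemble kafka-topics.sh --create and --describe
# command lines. Assumptions: script at bin/kafka-topics.sh, broker at
# localhost:9092. Only argument lists are built; nothing is executed.
from typing import Dict, List, Optional

KAFKA_TOPICS = "bin/kafka-topics.sh"


def create_topic_cmd(topic: str, partitions: int, replication_factor: int,
                     configs: Optional[Dict[str, str]] = None,
                     bootstrap: str = "localhost:9092") -> List[str]:
    cmd = [KAFKA_TOPICS, "--bootstrap-server", bootstrap, "--create",
           "--topic", topic,
           "--partitions", str(partitions),
           "--replication-factor", str(replication_factor)]
    # --config may be repeated, once per overridden topic option.
    for key, value in (configs or {}).items():
        cmd += ["--config", f"{key}={value}"]
    return cmd


def describe_topic_cmd(topic: Optional[str] = None,
                       bootstrap: str = "localhost:9092") -> List[str]:
    cmd = [KAFKA_TOPICS, "--bootstrap-server", bootstrap, "--describe"]
    # Without --topic, all available topics are described.
    if topic is not None:
        cmd += ["--topic", topic]
    return cmd


if __name__ == "__main__":
    print(" ".join(create_topic_cmd("test", partitions=1, replication_factor=1)))
    print(" ".join(describe_topic_cmd("mytopic")))
```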

When a producer or consumer tries to send messages to or receive messages from a topic that does not exist, Kafka automatically creates that topic by default.

Each partition can have one or more replicas, which are stored on different brokers in the cluster. The followers replicate the leader. Once a retention limit is reached, the oldest messages are removed.

The kafka-topics.sh tool can be used to manage topics. kafka-configs.sh is also part of the AMQ Streams distribution and can be found in the bin directory. Use it to get the current configuration of a topic such as mytopic and to change that configuration.
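Getting and changing a topic's configuration with kafka-configs.sh can be sketched in the same way. This assumes the script is at bin/kafka-configs.sh and the broker is at localhost:9092. The helper names are hypothetical, while --entity-type, --entity-name, --describe, --alter, --add-config, and --delete-config are real kafka-configs.sh options.

```python
# Hypothetical helpers for kafka-configs.sh command lines on topics.
# Assumptions: script at bin/kafka-configs.sh, broker at localhost:9092.
from typing import Dict, List, Sequence

BASE = ["bin/kafka-configs.sh", "--bootstrap-server", "localhost:9092",
        "--entity-type", "topics"]


def describe_config_cmd(topic: str) -> List[str]:
    """Command that prints the topic's current configuration."""
    return BASE + ["--entity-name", topic, "--describe"]


def add_config_cmd(topic: str, options: Dict[str, str]) -> List[str]:
    """Command that adds or changes options.

    Several options go into one comma-separated --add-config value.
    A value that itself contains commas would need the [a,b] list syntax.
    """
    value = ",".join(f"{k}={v}" for k, v in options.items())
    return BASE + ["--entity-name", topic, "--alter", "--add-config", value]


def delete_config_cmd(topic: str, names: Sequence[str]) -> List[str]:
    """Command that removes options; only the option names are given."""
    return BASE + ["--entity-name", topic, "--alter",
                   "--delete-config", ",".join(names)]
```

For example, add_config_cmd("mytopic", {"retention.ms": "604800000"}) would set a 7-day retention period, because 7 × 24 × 3600 × 1000 = 604800000 ms.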

To remove options with kafka-configs.sh, specify them in the --delete-config option.

For each topic, the Kafka cluster maintains a partitioned log. When you create a topic using the kafka-topics.sh utility, specify the topic replication factor in the --replication-factor option. The defaults for auto-created topics can be specified in the Kafka broker configuration using similar options. For a list of all supported Kafka broker configuration options, see Appendix A, Broker configuration parameters. If the leader fails, one of the followers automatically becomes the new leader.

When both a time limit and a size limit are set, whichever limit is reached first is used. Messages that are past their retention policy are deleted only when a new log segment is created. For more information about the message retention configuration options, see Section 5.5, Topic configuration.
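The reason expired messages linger until a new segment is created can be shown with a simplified model. Retention removes whole segments, and in this sketch only closed segments (not the active, newest one) are eligible. A segment counts as expired when its newest message is past retention. The Segment class and deletable_segments function are hypothetical. Recent broker versions may roll a fully expired active segment themselves before deleting it, which this sketch ignores.

```python
# Simplified model of segment-based deletion: retention removes whole
# segments, and only closed segments are eligible. The last segment in the
# list is the active one and is never deleted here. Not the broker's code.
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    base_offset: int
    newest_timestamp_ms: int   # timestamp of the newest message in the segment


def deletable_segments(segments: List[Segment], now_ms: int,
                       retention_ms: int) -> List[Segment]:
    """Closed segments whose newest message is past retention.

    `segments` is ordered oldest first; the last one is the active segment.
    """
    closed = segments[:-1]
    return [s for s in closed if now_ms - s.newest_timestamp_ms > retention_ms]
```

With a single active segment, nothing is deleted even if all its messages have expired. Once a new segment has been created, the old one becomes eligible.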

You can also override some of the default topic configuration options using the --config option. Every message sent by a producer is written to exactly one partition. To describe a topic, use the kafka-topics.sh utility with the --describe option. When the --topic option is omitted, it describes all available topics.
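One way a producer can route each keyed message to exactly one partition is to hash the key modulo the partition count. Kafka's default partitioner hashes the serialized key with murmur2. This sketch uses zlib.crc32 purely as a stand-in hash, and the choose_partition function is hypothetical. The property it illustrates still holds: the same key always maps to the same single partition.

```python
# Simplified illustration of mapping a message key to exactly one partition.
# Kafka's default partitioner uses murmur2 on the serialized key;
# zlib.crc32 is only a stand-in hash here.
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    if num_partitions <= 0:
        raise ValueError("a topic has at least one partition")
    # crc32 returns a non-negative int, so the result is in [0, num_partitions).
    return zlib.crc32(key) % num_partitions


if __name__ == "__main__":
    for key in (b"user-1", b"user-2", b"user-1"):
        print(key, choose_partition(key, 6))
```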

The topic name must be specified in the --topic option, and the --config option can be used multiple times to override different options. It is also possible to change a topic's configuration after it has been created. Topic deletion is controlled through the delete.topic.enable property, which is set to true by default (that is, deleting topics is possible).

Each server acts as a leader for some of its partitions and a follower for others, so the load is well balanced within the cluster. Thanks to the sharding of messages into different partitions, topics are easy to scale horizontally.
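To see how each broker can lead some partitions and follow for others, consider a simplified round-robin placement. Partition p's replicas start at broker p mod n and continue on the following brokers, and the first replica is treated as the leader. The function names are hypothetical. Kafka's real assignment also randomizes the starting broker and shifts follower positions, so treat this only as an illustration of the balancing idea.

```python
# Simplified round-robin replica placement: partition p's replicas start at
# broker p % n and continue on the next brokers; the first replica leads.
# Kafka's real assignment also randomizes the start and shifts followers.
from collections import Counter
from typing import Dict, List


def assign_replicas(num_partitions: int, brokers: List[int],
                    rf: int) -> Dict[int, List[int]]:
    if rf > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    n = len(brokers)
    return {p: [brokers[(p + i) % n] for i in range(rf)]
            for p in range(num_partitions)}


def leaders_per_broker(assignment: Dict[int, List[int]]) -> Counter:
    """Count how many partitions each broker leads."""
    return Counter(replicas[0] for replicas in assignment.values())


if __name__ == "__main__":
    a = assign_replicas(6, [1, 2, 3], rf=3)
    print(a)
    print(leaders_per_broker(a))
```

With 6 partitions on 3 brokers, each broker leads two partitions and follows for the other four.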

The Kafka cluster stores streams of records in categories called topics. Because compaction is a periodically executed action, it does not happen immediately when new messages with the same key are sent to the partition. It might take some time until the older messages are removed.

When delete.topic.enable is set to false, it is not possible to delete topics. All attempts to delete a topic return success, but the topic is not deleted. Automatic topic creation is controlled by the auto.create.topics.enable configuration property, which is set to true by default.

Use --describe option to get the current configuration. Grammar Data (State) Data storage considerations", Collapse section "2.4. Enabling Client-to-server authentication using DIGEST-MD5, 4.8.2. Configuring Kafka Java clients to use OAuth 2.0, 4.11. The replication factor determines the number of replicas including the leader and the followers. Overview of AMQ Streams", Collapse section "1. Apache Kafka and ZooKeeper storage support, 2.5. File System For a topic with the compacted policy, the broker will always keep only the last message for each key. A topic is always split into one or more partitions. Using AMQ Streams with MirrorMaker 2.0, 10.2.1. Data Structure Using AMQ Streams with MirrorMaker 2.0", Expand section "10.2. Additionally, Kafka brokers support a compacting policy. Nominal OAuth 2.0 authentication configuration in the Kafka cluster, 4.10.2.3. Avoiding data loss or duplication when committing offsets", Expand section "7.1. Specify the options you want to add or change in the option --add-config. Reassignment of partitions", Collapse section "7.2. Data Quality The other replicas will be follower replicas. Running a single node AMQ Streams cluster, 3.3. To keep the two topics in sync you can either dual write to them from your client (using a transaction to keep them atomic) or, more cleanly, use Kafka Streams to copy one into the other. Running multi-node ZooKeeper cluster, 3.4.2. Configuring OAuth 2.0 authentication", Collapse section "4.10.6. Deploying the Kafka Bridge locally, 13.2.2. Using Kerberos (GSSAPI) authentication", Collapse section "14. ZooKeeper authentication", Expand section "4.7.1. Requests to the Kafka Bridge", Collapse section "13.1.2. topics that match any pattern in the ksql.hidden.topics configuration. Cluster configuration", Collapse section "10.2. [emailprotected] Setting up tracing for Kafka clients, 16.2.1. 
SHOW TOPICS lists the available topics in the Kafka cluster that ksqlDB is Messages in Kafka are always sent to or received from a topic. MBeans matching kafka.consumer:type=consumer-coordinator-metrics,client-id=*, 8.7.4. There's also live online events, interactive content, certification prep materials, and more. The kafka-topics.sh tool can be used to list and describe topics.

Use --describe option to get the current configuration. Grammar Data (State) Data storage considerations", Collapse section "2.4. Enabling Client-to-server authentication using DIGEST-MD5, 4.8.2. Configuring Kafka Java clients to use OAuth 2.0, 4.11. The replication factor determines the number of replicas including the leader and the followers. Overview of AMQ Streams", Collapse section "1. Apache Kafka and ZooKeeper storage support, 2.5. File System For a topic with the compacted policy, the broker will always keep only the last message for each key. A topic is always split into one or more partitions. Using AMQ Streams with MirrorMaker 2.0, 10.2.1. Data Structure Using AMQ Streams with MirrorMaker 2.0", Expand section "10.2. Additionally, Kafka brokers support a compacting policy. Nominal OAuth 2.0 authentication configuration in the Kafka cluster, 4.10.2.3. Avoiding data loss or duplication when committing offsets", Expand section "7.1. Specify the options you want to add or change in the option --add-config. Reassignment of partitions", Collapse section "7.2. Data Quality The other replicas will be follower replicas. Running a single node AMQ Streams cluster, 3.3. To keep the two topics in sync you can either dual write to them from your client (using a transaction to keep them atomic) or, more cleanly, use Kafka Streams to copy one into the other. Running multi-node ZooKeeper cluster, 3.4.2. Configuring OAuth 2.0 authentication", Collapse section "4.10.6. Deploying the Kafka Bridge locally, 13.2.2. Using Kerberos (GSSAPI) authentication", Collapse section "14. ZooKeeper authentication", Expand section "4.7.1. Requests to the Kafka Bridge", Collapse section "13.1.2. topics that match any pattern in the ksql.hidden.topics configuration. Cluster configuration", Collapse section "10.2. [emailprotected] Setting up tracing for Kafka clients, 16.2.1. 
SHOW TOPICS lists the available topics in the Kafka cluster that ksqlDB is Messages in Kafka are always sent to or received from a topic. MBeans matching kafka.consumer:type=consumer-coordinator-metrics,client-id=*, 8.7.4. There's also live online events, interactive content, certification prep materials, and more. The kafka-topics.sh tool can be used to list and describe topics.  Unidirectional replication (active/passive), 10.2.3. Dynamically change logging levels for Kafka broker loggers, 6.2.2. MBeans matching kafka.consumer:type=consumer-fetch-manager-metrics,client-id=*,topic=*, 8.7.6. For example, if you set the replication factor to 3, then there will one leader and two follower replicas. Until the parition has 1GB of messages. Browser Rebalance performance tuning overview, 15.11. ZooKeeper authentication", Collapse section "4.6. For a production environment you would have many more broker nodes, partitions, and replicas for scalability and resiliency. SHOW ALL TOPICS lists all topics, including hidden topics. MBeans matching kafka.consumer:type=consumer-metrics,client-id=*,node-id=*, 8.7.3. Simple ACL authorizer", Collapse section "4.7.1. Engage with our Red Hat Product Security team, access security updates, and ensure your environments are not exposed to any known security vulnerabilities. MBeans matching kafka.producer:type=producer-topic-metrics,client-id=*,topic=*, 8.7.1. Enabling SASL SCRAM authentication, 4.10. Retrieving the latest messages from a Kafka Bridge consumer, 13.2.7. When creating a topic you can configure the number of replicas using the replication factor. Testing Css These topics can be configured using dedicated Kafka broker configuration options starting with prefix offsets.topic. Cruise Control for cluster rebalancing", Collapse section "16. Ratio, Code Using OAuth 2.0 token-based authorization", Expand section "4.11.1. Configuring Kafka Connect in distributed mode, 9.2.2. 
Using Kerberos (GSSAPI) authentication", Expand section "15. Important Kafka broker metrics", Expand section "8.8. 2022, OReilly Media, Inc. All trademarks and registered trademarks appearing on oreilly.com are the property of their respective owners. Cryptography Data Type Kafka Connect in distributed mode", Collapse section "9.2. MBeans matching kafka.connect:type=sink-task-metrics,connector=*,task=*, 8.8.8. ZooKeeper authorization", Expand section "4.9. One of the replicas for given partition will be elected as a leader. Reassignment of partitions", Expand section "8. Shipping Kafka Streams API overview", Collapse section "12. kafka kubernetes portworx statefulset failing MBeans matching kafka.streams:type=stream-processor-node-metrics,client-id=*,task-id=*,processor-node-id=*, 8.9.4. AMQ Streams and Kafka upgrades", Expand section "18.4. OAuth 2.0 authentication mechanisms", Collapse section "4.10.1. Time Partitions act as shards. Kafka Streams API overview", Expand section "13.1. It can be defined based on time, partition size or both. MBeans matching kafka.consumer:type=consumer-fetch-manager-metrics,client-id=*, 8.7.5. The message retention policy defines how long the messages will be stored on the Kafka brokers. For a production environment you would have many more broker nodes, partitions, and replicas for scalability and resiliency. Using OPA policy-based authorization", Collapse section "4.12. Important Kafka broker metrics", Collapse section "8.5. MBeans matching kafka.connect:type=source-task-metrics,connector=*,task=*, 8.8.9. Operating System Data Warehouse Get full access to Apache Kafka Series - Kafka Streams for Data Processing and 60K+ other titles, with free 10-day trial of O'Reilly. It is possible to create Kafka topics dynamically; however, this relies on the Kafka brokers being configured to allow dynamic topics. Subscribing a Kafka Bridge consumer to topics, 13.2.5. Kafka Bridge overview", Expand section "13.1.2. 
Enabling tracing for the Kafka Bridge, 17.2. For 7 days or until the 1GB limit has been reached. Distributed tracing", Expand section "16.2. ZooKeeper authorization", Collapse section "4.8. Configuring Red Hat Single Sign-On as an OAuth 2.0 authorization server, 4.10.6.2. Data (State) Kafka Bridge quickstart", Expand section "14. Trigonometry, Modeling Upgrading to AMQ Streams 1.7", Collapse section "18.4. Cruise Control for cluster rebalancing", Collapse section "15. To disable it, set auto.create.topics.enable to false in the Kafka broker configuration file: Kafka offers the possibility to disable deletion of topics. Running Kafka Connect in standalone mode, 9.2.1. api tooling report use reports self help understanding each iii valid usage way Scaling Kafka clusters", Collapse section "7.1. Graph Example of the command to describe a topic named mytopic. Upgrading Kafka brokers and ZooKeeper, 18.5.1. Kafka Bridge quickstart", Collapse section "13.2. Configuring OAuth 2.0 authorization support, 4.12. Fast local JWT token validation configuration, 4.10.2.4. Adding Kafka clients as a dependency to your Maven project, 12.1. Get Mark Richardss Software Architecture Patterns ebook to better understand how to design componentsand how they should interact. Recovering from failure to avoid data loss, 6.2.8. Kafka Connect in standalone mode", Expand section "9.2. Enabling ZooKeeper ACLs in an existing Kafka cluster, 4.9.5. Minimizing the impact of rebalances, 7.2.2. OAuth 2.0 client authentication flow", Expand section "4.10.6. Distance create a topic named test with a single partition and only one replica: Docker Single Node (Multiple Service Broker + Zookeeper), Installation Standalone / Open Source (Single Broker), Kafka Connect - Sqlite in Standalone Mode, https://kafka.apache.org/documentation.html#newconsumerconfigs. 
AMQ Streams cluster is installed and running, For more information about topic configuration, see, For list of all supported topic configuration options, see, For more information about creating topics, see, Specify the host and port of the Kafka broker in the. Auto-created topics will use the default topic configuration which can be specified in the broker properties file. Statistics Simple ACL authorizer", Expand section "4.8. Please report any inaccuracies on this page or suggest an edit. Data Visualization The kafka-configs.sh tool can be used to modify topic configurations. The leader replica will be used by the producers to send new messages and by the consumers to consume messages. OAuth 2.0 authorization mechanism, 4.11.2. When a producer or consumer tries to send messages to or receive messages from a topic that does not exist, Kafka will, by default, automatically create that topic. Presenting Kafka Exporter metrics in Grafana, 18.4.1. Generating reassignment JSON files, 7.2.3. Use the kafka-configs.sh tool to get the current configuration. Lexical Parser Dimensional Modeling Each partition can have one or more replicas, which will be stored on different brokers in the cluster. Deploying the Cruise Control Metrics Reporter, 15.4. Example of the command to create a topic named mytopic. kafka-topics.sh is part of the AMQ Streams distribution and can be found in the bin directory. Kafka Exporter alerting rule examples, 17.5. Relational Modeling Avoiding data loss or duplication when committing offsets, 6.2.5.1. Process (Thread) Example of the command to change configuration of a topic named mytopic. Using OAuth 2.0 token-based authorization, 4.11.1. MBeans matching kafka.connect:type=task-error-metrics,connector=*,task=*, 8.9.1. Key/Value kafka-configs.sh is part of the AMQ Streams distribution and can be found in the bin directory. Initializing a Jaeger tracer for Kafka clients, 16.2.2. Color Monitoring your cluster using JMX", Collapse section "8. 
The kafka-topics.sh tool can be used to manage topics. When creating a topic with it, specify the topic replication factor in the --replication-factor option.

To remove an existing configuration option with kafka-configs.sh, specify the options you want to remove in the --delete-config option. The defaults for auto-created topics can be specified in the Kafka broker configuration using similar options. For a list of all supported Kafka broker configuration options, see Appendix A, Broker configuration parameters.

In ksqlDB, SHOW TOPICS does not display hidden topics by default.

For each topic, the Kafka cluster maintains a partitioned log, and in each partition the followers replicate the leader.

When a retention policy sets both a time limit and a size limit, whichever limit is reached first applies. Once it is reached, the oldest messages are removed. However, messages that are past their retention policy are deleted only when a new log segment is created.
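A simplified Python model of one partition's log can illustrate these retention rules. It assumes the log is a list of segments ordered oldest first, with the last segment active. The Segment class, apply_retention and the numbers are hypothetical, and Kafka's own retention checks differ in detail. The model shows why whichever limit is reached first triggers deletion. It also shows why expired messages in the active segment stay until a new segment is created.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Segment:
    base_offset: int   # offset of the first message in the segment
    newest_ts_ms: int  # timestamp of the newest message in the segment
    size_bytes: int


def apply_retention(segments: List[Segment], now_ms: int,
                    retention_ms: Optional[int],
                    retention_bytes: Optional[int]) -> List[Segment]:
    """One simplified retention pass over a partition's segments.

    Segments are ordered oldest first; the last one is the active segment
    and is never deleted, so expired messages in it stay until a new
    segment is rolled. Closed segments are deleted whole, oldest first,
    when they breach either limit: whichever limit is hit first applies.
    """
    kept = list(segments)
    total = sum(s.size_bytes for s in kept)
    while len(kept) > 1:  # keep the active (last) segment
        oldest = kept[0]
        too_old = (retention_ms is not None
                   and now_ms - oldest.newest_ts_ms > retention_ms)
        too_big = retention_bytes is not None and total > retention_bytes
        if not (too_old or too_big):
            break
        total -= oldest.size_bytes
        kept.pop(0)
    return kept


DAY_MS = 24 * 60 * 60 * 1000
GB = 1024 ** 3
now = 10 * DAY_MS
# Hypothetical partition: three closed segments plus the active one.
log = [
    Segment(0, now - 9 * DAY_MS, GB // 2),
    Segment(100, now - 8 * DAY_MS, GB // 2),
    Segment(200, now - 1 * DAY_MS, GB // 2),
    Segment(300, now, GB // 4),  # active segment
]
# 7 days or 1GB, whichever comes first.
kept = apply_retention(log, now, retention_ms=7 * DAY_MS, retention_bytes=GB)
print([s.base_offset for s in kept])  # [200, 300]
```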
If the leader fails, one of the followers automatically becomes the new leader.

When creating a topic, you can also override some of the default topic configuration options using the --config option. For more information about the message retention configuration options, see Section 5.5, Topic configuration.

Every message sent by a producer is written into exactly one partition.
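The sketch below maps each message key to a partition with a hash, which illustrates why each message lands in exactly one partition. It is a simplified model. zlib.crc32 stands in for the murmur2 hash used by Kafka's Java client, and messages without keys are not modeled. choose_partition and partition_messages are hypothetical names.

```python
import zlib
from collections import defaultdict


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a message key to exactly one partition.

    zlib.crc32 is a stand-in chosen for this sketch; Kafka's Java client
    hashes keys with murmur2 instead. The property shown is the same: a
    given key always maps to the same partition.
    """
    return zlib.crc32(key) % num_partitions


def partition_messages(messages, num_partitions):
    """Group (key, value) messages by the single partition each is written to."""
    partitions = defaultdict(list)
    for key, value in messages:
        partitions[choose_partition(key, num_partitions)].append((key, value))
    return dict(partitions)


# Hypothetical keyed messages sent to a topic with 3 partitions.
messages = [(b"user-1", "login"), (b"user-2", "login"),
            (b"user-1", "click"), (b"user-3", "logout")]
by_partition = partition_messages(messages, 3)
for partition, msgs in sorted(by_partition.items()):
    print(partition, msgs)
```

Both messages keyed user-1 end up in the same partition. This keeps the order of messages for one key within that partition.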
The topic name must be specified in the --topic option. The exception is describing topics with the kafka-topics.sh utility: when the --topic option is omitted, it describes all available topics. It is also possible to change a topic's configuration after it has been created. When overriding topic configuration, the --config option can be used multiple times to override different options.

Topic deletion is controlled by the delete.topic.enable property, which is set to true by default (that is, deleting topics is possible).

The Kafka cluster stores streams of records in categories called topics. Thanks to the sharding of messages into different partitions, topics are easy to scale horizontally. Each server acts as a leader for some of its partitions and a follower for others, so the load is well balanced within the cluster.
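A simplified round-robin placement shows how every broker can end up leading some partitions and following others. This is not Kafka's assignment algorithm, which differs in detail (for example, it can take broker racks into account). assign_replicas and the cluster layout are hypothetical.

```python
from collections import Counter


def assign_replicas(num_partitions, brokers, replication_factor):
    """Simplified round-robin placement of partition replicas on brokers.

    Partition p gets `replication_factor` consecutive brokers starting at
    position p, so its replicas are on different brokers. The first broker
    in each list is treated as the leader; the rest are followers.
    """
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    n = len(brokers)
    return {p: [brokers[(p + i) % n] for i in range(replication_factor)]
            for p in range(num_partitions)}


# Hypothetical cluster: 3 brokers, a topic with 6 partitions, replication factor 3.
assignment = assign_replicas(6, [1, 2, 3], 3)
leaders = Counter(replicas[0] for replicas in assignment.values())
followers = Counter(b for replicas in assignment.values() for b in replicas[1:])
print(dict(sorted(leaders.items())))    # {1: 2, 2: 2, 3: 2}
print(dict(sorted(followers.items())))  # {1: 4, 2: 4, 3: 4}
```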
The describe command also lists all topic configuration options.

Because compaction is a periodically executed action, it does not happen immediately when new messages with the same key are sent to the partition.
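A few lines of Python can sketch what a compaction pass leaves behind. The model assumes records are (key, value) pairs in offset order, and it ignores tombstones and segment boundaries. compact is a hypothetical helper, not part of any Kafka API.

```python
def compact(records):
    """Simplified compaction of a partition's (key, value) records.

    Records are given in offset order. Only the latest record for each key
    survives, and survivors keep their original offsets. Tombstones
    (null values) and the active segment are not modeled.
    """
    latest_offset = {}
    for offset, (key, _value) in enumerate(records):
        latest_offset[key] = offset
    return [(offset, key, value)
            for offset, (key, value) in enumerate(records)
            if latest_offset[key] == offset]


# Hypothetical records before the periodic compaction pass runs.
records = [("k1", "a"), ("k2", "b"), ("k1", "c"), ("k3", "d"), ("k2", "e")]
print(compact(records))  # [(2, 'k1', 'c'), (3, 'k3', 'd'), (4, 'k2', 'e')]
```

Until such a pass runs, consumers can still read the older values for k1 and k2.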

With a size-based retention policy, messages can be kept until the partition has 1GB of messages.

In ksqlDB, SHOW ALL TOPICS lists all topics, including hidden topics. Internal topics, such as __consumer_offsets, can be configured using dedicated Kafka broker configuration options; the options for the consumer offsets topic start with the prefix offsets.topic.

When creating a topic, you can configure the number of replicas using the replication factor. For example, if you set the replication factor to 3, there will be one leader and two follower replicas. For a production environment you would have many more broker nodes, partitions, and replicas for scalability and resiliency.
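With a replication factor of 3, each partition has one leader and two followers, and when the leader fails a follower takes over. The sketch below models that takeover under a simplifying assumption: the first replica in assignment order that is alive and in the in-sync replica set becomes the leader. The function and broker IDs are hypothetical. Real leader election is performed by the Kafka controller and involves more rules than shown here.

```python
def elect_leader(replicas, in_sync, live_brokers):
    """Choose a partition leader under a simplified model.

    Walks the assigned replica list in order and returns the first broker
    that is both alive and in the set of in-sync replicas.
    """
    for broker in replicas:
        if broker in live_brokers and broker in in_sync:
            return broker
    raise RuntimeError("no live in-sync replica is available")


# Hypothetical partition with replication factor 3: broker 1 leads,
# brokers 2 and 3 follow and are in sync.
replicas = [1, 2, 3]
in_sync = {1, 2, 3}
print(elect_leader(replicas, in_sync, live_brokers={1, 2, 3}))  # 1
print(elect_leader(replicas, in_sync, live_brokers={2, 3}))     # 2 (leader 1 failed)
```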
The message retention policy defines how long messages are stored on the Kafka brokers. It can be defined based on time, partition size, or both.

Partitions act as shards. One of the replicas for a given partition is elected as the leader.

It is possible to create Kafka topics dynamically; however, this relies on the Kafka brokers being configured to allow dynamic topics.
When delete.topic.enable is set to false, it is not possible to delete topics: all attempts to delete a topic return success, but the topic is not deleted.

Automatic topic creation is controlled by the auto.create.topics.enable configuration property, which is set to true by default.
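A small in-memory class can model automatic topic creation. This is a toy, not a broker or client, and ToyCluster and ensure_topic are hypothetical. Only the constructor argument names mirror the broker properties auto.create.topics.enable, num.partitions and default.replication.factor, whose Kafka defaults are true, 1 and 1.

```python
class ToyCluster:
    """In-memory toy model of topic auto-creation; not a Kafka client or broker.

    The constructor arguments mirror the broker properties
    auto.create.topics.enable, num.partitions and default.replication.factor.
    """

    def __init__(self, auto_create_topics_enable=True, num_partitions=1,
                 default_replication_factor=1):
        self.auto_create_topics_enable = auto_create_topics_enable
        self.num_partitions = num_partitions
        self.default_replication_factor = default_replication_factor
        self.topics = {}

    def ensure_topic(self, name):
        """Model a client producing to or consuming from topic `name`."""
        if name not in self.topics:
            if not self.auto_create_topics_enable:
                raise LookupError(f"topic {name!r} does not exist")
            # An auto-created topic gets the broker-level defaults.
            self.topics[name] = {"partitions": self.num_partitions,
                                 "replication_factor": self.default_replication_factor}
        return self.topics[name]


cluster = ToyCluster()  # auto.create.topics.enable defaults to true
print(cluster.ensure_topic("mytopic"))  # {'partitions': 1, 'replication_factor': 1}

strict = ToyCluster(auto_create_topics_enable=False)
try:
    strict.ensure_topic("mytopic")
except LookupError as err:
    print(err)  # topic 'mytopic' does not exist
```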
